Burrell's first important point (102) is:

A binomial distribution Bin(n, θ) with n large and θ small can be approximated by a Poisson distribution with mean λ = nθ.

What does this mean, and why does it matter?

Burrell is responding to Proot and Egghe's formula for estimating lost editions, and they use a formula for the binomial distribution as their basis for their work. Burrell is restating a basic fact from statistics: For a data set where the number of attempts at success is large and the chance of success is small, the data will tend to fit not only a binomial distribution but also a Poisson distribution, which has some useful features for the kind of analysis he will be doing later. Where a binomial distribution has two parameters, the number of trials and the chance of success (n and θ, respectively), a Poisson distribution has only one parameter, its mean (or λ).

Is n large? In this case, yes, since n is the number of copies of each Jesuit play program that were printed. Although n isn't the same for every play program, it's a safe assumption that it's at least 150 or more, so the difference in print runs ends up not making much difference (as Proot and Egghe show in their article).

Is θ small? In this case, yes, since θ represents the likelihood of a copy to survive. With at most 5 copies of each edition surviving, θ is probably no higher than somewhere in the neighborhood of .02, and much lower for editions for fewer or no surviving copies.

So in these circumstances, can we approximate a binomial distribution with a Poisson distribution? Let's use R to illustrate how it works.

One thing that R makes quite easy is generating random data that follows a particular distribution. Let's start with 3500 observations (or, we might say, editions originally printed), with 250 trials in each (or copies of each edition that might survive) and a very low probability that any particular copy will survive, or .002. In R, we can simulate this as

> x <- rbinom (3500, 250, .002)

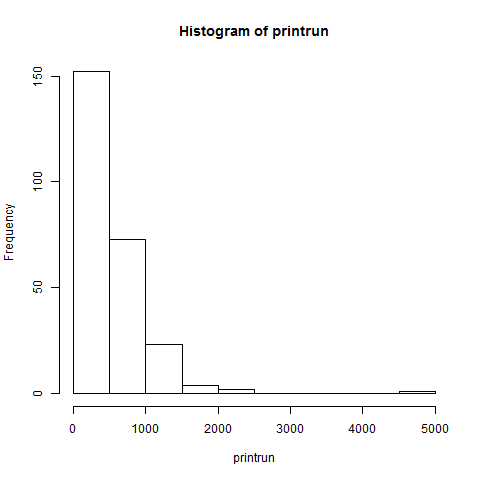

In other words, fill up the vector x with 3500 random points that follow a binomial distribution. What does it look like?

> hist (x)

Now let's compare random points that follow Poisson distribution. We want 3500 data points again, and we also need to provide a value for λ. Since we want to see if λ really is equal to nθ, we'll use 250*.002 or .5 for λ.

> y <- rpois (3500,.5)

Let's see what the result looks like:

> hist (y)

If you look very closely, you can see that the histograms are very similar but not quite identical - which you would expect for 3500 points chosen at random.

Our data sets only have whole-number values, so let's make the histograms reflect that:

> hist (x, breaks=c(0,1,2,3,4,5))

> hist (y, breaks=c(0,1,2,3,4,5))

Let's compare the two sets of values to see how close they are. Whenever we create a histogram, R automatically creates some information about that histogram. Let's access it:

> xinfo <- hist (x, breaks=c(0,1,2,3,4,5))

> yinfo <- hist (y, breaks=c(0,1,2,3,4,5))

Typing xinfo results in a lot of useful information, but we specifically want the counts. We could type xinfo$counts, but we want to view our data in a nice table. So let's try this:

> table <- data.frame (xinfo$counts, yinfo$counts, row.names=c(0,1,2,3,4))

(We gave the table the row names of 0-4, because otherwise the row name for zero observations would be "1," and the row name for one observation would be "2," and so on, which would be confusing.) Typing table results in this:

xinfo.counts yinfo.counts

0 3204 3208

1 252 245

2 37 41

3 6 5

4 1 1

So there you have it. For the material Proot and Egghe are dealing with, a Poisson distribution will work as well as a binomial distribution. Next time: truncated distributions, or what happens when you don't know how many editions you have to begin with.